The Designer is Dead: Five Reasons to Go Beyond Intended Experiences

Games are designed to create particular mental and emotional states in their players. The Dark Souls games use difficulty to “give players a sense of accomplishment by overcoming tremendous odds”, Dead Rising’s replay-enforcing time limit and oddball weapon options encourage humorous experimentation, Far Cry 2’s unreliable weapons force players to improvise in chaotic battles, and so on. We call this the game’s “intended experience.”

Good games are those which successfully guide their players to worthwhile experiences, so the designer’s intent is key to a game’s quality. This leads some of us to conclude that designer intent should be elevated above player freedom - that players should be prevented from altering a game’s experience lest they ruin it for themselves.

“Decisions like [Dark Souls’s difficulty level, Dead Rising’s time limit, and Far Cry 2’s jamming weapons] might be controversial, but if they’re an integral part of the experience that the developer is trying to create, then the player shouldn’t feel like they’re entitled to be able to mess with this stuff through options, modes, and toggles. Because that would screw with the developer’s intentions and could end up ruining the game in the long run.”

—Mark Brown, What Makes Celeste’s Assist Mode Special | Game Maker’s Toolkit (at 22 seconds) (to be fair, the rest of the video adds a lot of nuance to this position)

I strongly disagree with this. To me, the designer’s intent is the starting point and not the finish line. If we cling to it and discourage players from exploring any further, we rob it of most of its value. Here’s why.

1. The intent is sometimes evil.

Game designers, as a rule, are not Santa Claus. While there are folks out there hand-crafting games for the good people of the world out of pure generosity, designers (and developers, and artists, and writers, and testers, and distributors, etc.) generally need to eat. Consequently, most games of note are products or services that are designed in part to make money.

There’s a common idea that some creators make money in order to make games while other creators make games in order to make money. The poster child for “make games to make money” is Electronic Arts, whose well-documented love affair with loot boxes came to a head with the poorly-received Star Wars Battlefront II.

When a player complained on Reddit about the game’s exploitative structure, and about the fact that even after paying $80 for the game a character as iconic as Darth Vader was not available to play, a representative from EA defended the game with an appeal to designer intent. The reply comment opened with “The intent is to provide players with a sense of pride and accomplishment for unlocking different heroes.”

So naturally, the community rallied around EA’s vision and called the complainer an entitled whiner for rejecting the intended experience…

Oh wait, no, my bad - they immediately turned the EA rep’s response into the most downvoted comment in Reddit history and the backlash against the game was so strong that multiple governments got involved.

Star Wars Battlefront II makes it blindingly obvious that its designers don’t have the audience’s best interests at heart. And when the intended experience is clearly “the player opens their wallet again and shells out for loot boxes because otherwise it takes forty hours to unlock a single character” we have no problem rejecting that intent. But most games aren’t anywhere near this blatant. There are a lot of gray areas.

What about games that use “fun pain” to encourage players to pay for otherwise-free experiences, thereby creating a form of price discrimination that allows whales to subsidize free players, meaning that more people can experience the game than if it had a flat cost? What about games that create social obligation to keep players involved, resulting in a stronger player base and opportunity for high-quality shared experiences? What about games that directly reward engagement to make players feel good about coming back whether they want to or not?

How can we tell when the designer is actually trying to harm the audience for their own profit, versus when they’re just trying to do what they have to do to survive a crowded marketplace and share their art? Here’s a hint: it doesn’t actually matter. The motives don’t change the outcome. Regardless of why, the intended experience is designed to sacrifice some amount of player well-being for profit, directly through purchases or indirectly through engagement. In these cases, constraining ourselves to only follow designer intent is downright bad for us.

But okay, this is clearly a straw man, right? After all, the community did rally against Star Wars Battlefront II. Nobody’s out there saying the reason we shouldn’t have an easy mode in Candy Crush Saga is to protect its artistic vision. The real conversation is about games designed to enrich the audience, not exploit them - even if those games must be sold for money. So if we just focus on games where we can be confident the designer isn’t trying to exploit the player, is it safe for us to privilege those artists’ intent and discourage people from subverting it?

Well, no. Because:

2. It’s bad accessibility.

Spyro Reignited Trilogy, a 2018 collection of remakes of the first three Spyro the Dragon games, launched with no subtitles for its cutscenes. When challenged on this, an Activision spokesperson’s response opened with “When [the developer] set out to make an awesome game collection, there were certain decisions that needed to be made throughout the process. The team remained committed to keep the integrity and legacy of Spyro that fans remembered intact.”

This wasn’t a bug; it was a choice by the creators. So when some people complained about the omission, naturally the community pointed out that reading subtitles would ruin the pacing of the dialog and distract from the animation and if you couldn’t hear the spoken dialog maybe the game just wasn’t for you…

Oh wait, no, my bad - they ragged on Activision for sacrificing accessibility to save money and hiding behind an arguably outright false claim that there is no “industry standard” for subtitles, and four months after launch subtitles were patched in.

Even those of us with perfect hearing are aware that deaf and hard-of-hearing people exist and like games too. Even if we believe that the ideal way to experience Spyro’s cutscenes is with both sight and sound, we recognize that’s not actually an option for everyone - and it’s better to use a simple accommodation like subtitles to share a similar experience than to slam the door in the face of people who can’t have exactly the intended experience. As a community, we seem to understand that accessibility accommodations are about letting people share in the experience, even if on the surface they look like they subvert the experience.

Hearing impairment is easy to understand. A developer can pop in some earplugs and see that yeah, their game is hard to understand without subtitles when you can’t clearly hear what the characters are saying. Other barriers to accessibility are less well-known or harder to understand if you’ve never been faced with them. Even if a designer watches all of Mark Brown’s “Designing for Disability” videos and makes sure to handle everything listed there, that won’t make their game accessible to absolutely everyone who might want to play it.

One thing designers can do as a final safety net is to ensure that no player has to be blocked by the game’s challenges if they don’t want to be. As I’ve written before, optional easy modes or even the ability to skip sections of gameplay are valuable ways to let as many people as possible share the experience to some degree. In an ideal world, it would be possible for each player to just fix whatever it is about the game that’s specifically blocking them; but a world in which they can lower general difficulty enough to get by anyway - even if that’s lowering it all the way to zero - is still better than one in which they can’t play the game at all.

To be clear, I recognize that accommodations aren’t free. Not every designer can afford to focus on accessibility. My argument is that if you wouldn’t argue against subtitles to preserve designer intent, then you shouldn’t argue against Easy Mode for that reason either.

But come on, this is a straw man too, right? Accessibility is hard and people understand that accommodations make sense as a compromise where feasible. Nobody’s really saying that people with disabilities shouldn’t be able to play Dark Souls. So if we just focus on cases where accessibility concerns are solved or irrelevant (and the designer isn’t trying to exploit the player), can we safely appeal to designer intent and tell people not to violate it?

Still no. Here’s why:

3. The designer doesn’t know you.

We just talked about how game designers have to take the player’s physical capabilities into account to ensure a game can be experienced as intended and how that’s an impossible ideal since people have a huge range of physical capabilities. But this is actually true for everything else about the player, too. Most games are intended to be played by people the designer doesn’t know personally. That means the designer has to design for an imagined audience. Ideally, most players will overlap with the imagined audience in most ways. But any way a real player deviates from the imagined player adds risk that the game won’t be experienced as intended.

SOMA is a survival horror game released by Frictional Games in 2015. The player character explores a mysterious facility, solving puzzles and learning about his situation while using stealth to avoid deadly monsters. Some players disliked the monsters and the effect they had on gameplay and would have preferred experiencing the game’s story without them, but as game director Thomas Grip explained, “The fear and tension that comes from those encounters are there in order to deliver a certain mood. The intention was to make sure players felt that this was a really unpleasant world to be in, and a lot of the game’s themes relied on evoking this.”

When a modder subverted this intent by releasing a mod called “Wuss Mode” that prevented the monsters from attacking, the community told him to suck it up and play the game right instead of trying to make it about himself…

Oh wait, no, my bad - enough players preferred the modified experience that Wuss Mode became the most-subscribed mod for SOMA on Steam, and Frictional Games patched the game with an improved and official “Safe Mode” option with similar effects.

Frictional wanted to create a certain mood of tension and fear, but SOMA’s original design didn’t create that mood in every player. Some players instead found that the monsters “looked cool, not creepy” and that their AI resulted in them being “roaming inconveniences rather than scares”. Simply put - people are different, and the same design can easily create very different experiences for different people. Adhering to a one-size-fits-all design philosophy and preventing players from tailoring their experience means that fewer players can get the intended experience. SOMA director Thomas Grip (the guy we just quoted about the intended experience) put it this way:

“I’m all for users modding the experience to suit their needs. The monsters in the game are quite divisive and you could argue that Wuss Mode intends to fix a design flaw. If you think of it that way, I guess you could see the mod as a critique directed at me. But I have a hard time getting upset about that. I just really like it when people take things into their own hands like this.”

Game designers can’t read your mind. They don’t know your skill level on any of the mechanics or challenges in their game. They don’t know what emotional reactions you’re going to have to their game’s content based on your experiences and associations. The designer may have an intended experience in mind, but they can’t know for sure what the game needs to provide to create that experience for any particular player. Why should the player privilege the designer’s guess over their own self-knowledge?

But surely this is also a straw man, right? We understand that different groups of players have different cultural knowledge and different experiences with varying game genres. Nobody’s denying that every game has a target audience and some players are necessarily outside of that audience due to their tastes and skills. So if we just look at situations where we’re confident the player is in the right demographic to receive the game’s intended experience (and there are no accessibility concerns and the designer isn’t trying to exploit the player), now can we say that players should only experience the game as the designer intended?

Nope! Because of this:

4. You don’t know the designer.

A lot of the time, we can only guess what the intended experience even is in the first place. Unless the designer comes right out and says what they were going for, all we have is the game itself and we can’t read it without injecting our own values and biases. Maybe there’s something about the game that we really like, so we assume it was core to the designer’s intent - but in fact, it was arbitrary or downright misinterpreted.

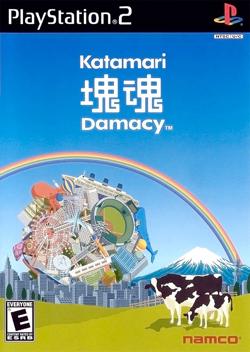

Katamari Damacy is a 2004 game in which the player character is tasked with rolling around a sticky ball to collect everyday objects into an ever-growing pile of assorted stuff until it’s suitable to be turned into a star. The game’s lighthearted quirkiness, colorful world, beautiful soundtrack, and simple-but-engaging gameplay made it a hit. Reviews described it as “pure joy right from the start”, having “the ability to evoke a sense of wonder”, or indeed “the happiest game I’ve ever played”.

But it turns out that game director Keita Takahashi had a very different intent. As reported by Eurogamer, Takahashi revealed at a 2009 Game Developers Conference that he’d created a world populated with fun objects for the player to collect as a cynical comment about society.

“If there are few objects, I feel lonely. If there are more objects, they will make things more colourful. But when they’re rolled up, they’re gone. I felt empty. I feel the same way about the disposable society. I think I successfully expressed my cynical stance towards the consumption society by making Katamari - but still I felt empty when the objects were gone.”

Since it was now public knowledge that Katamari Damacy was dark and cynical, people felt terrible about accidentally having fun and immediately stopped playing the game and its sequels rather than trample on what they now knew to be Takahashi’s intent…

Oh wait, no, my bad - the series kept going and even brought a remaster of the original to PC and Nintendo Switch in late 2018, which scores in the eighties on Metacritic.

Katamari Damacy was popular for years before Takahashi revealed what he’d had in mind for it. While some players found loneliness at the game’s core, it seems that most experienced the opposite, finding it “like sitting in the same room as a happy, cheerful, and funny friend”. None of these players were wrong - they could have argued with each other about which experience was the intended one, but even now that we know the game was supposed to be depressing, that doesn’t change the fact that a lot of people were genuinely uplifted by it.

If a game’s intended experience can be so lost in translation that most players are left with the opposite emotions, doesn’t that mean the intent is not the true heart of the game? Doesn’t that mean it would be folly to appeal to that intent to decide how people should experience the game?

But come on, this is clearly yet another straw man, right? Obviously some creators fail to express their vision. Nobody’s saying that a designer’s stated intent is more important than what the game actually is. So if we look at cases where the intent is embodied clearly (and the player is set up to receive it correctly, and there are no accessibility concerns, and the designer isn’t trying to exploit the player), now can we please admit that the intended experience is more important than the player’s whims?

Haha, of course not. And here is why:

5. It’s needlessly limiting.

Creation is collaborative and iterative.

StarCraft and Warcraft III are real-time strategy games released by Blizzard Entertainment in 1998 and 2002 respectively. Each came with a powerful map editor allowing players to create their own custom scenarios. One modder used StarCraft’s editing tools to create a map titled Aeon of Strife, whose gameplay was very different from StarCraft’s standard and more closely resembled a particular mode in a 1998 game called Future Cop: LAPD. This map was then converted for Warcraft III by another modder, with the new map being called Defense of the Ancients (DotA for short).

Variations and improvements were added by a number of modders, but since this was just a pileup of subversions of designer intent nobody paid much attention to the results…

Oh wait, no, my bad - this directly caused the explosive popularization of the MOBA genre, with one of the prominent modders joining Valve Corporation and helping them release direct sequel Dota 2, while another became the first hire at Riot Games and helped them create spiritual successor League of Legends, which for a time was the most-played PC game in North America and Europe.

This was only possible because of a spirit of playful experimentation and inventiveness. It’s because modders saw untapped potential in Future Cop: LAPD, in StarCraft, in Warcraft III, and even in each other’s work. If any link in that chain had been viewed as complete, closed, and final, we never would have gotten an incredibly popular set of games.

No more straw men. This is my true objection. Even if you’re sure you’re correctly understanding and receiving a benevolently-designed experience - why stop there? Why elevate a designer above yourself when it comes to shaping your own experiences?

I’m not saying game design is easy - quite the opposite. The space of all possible designs is huge and most potential games wouldn’t be any fun at all. Designers are explorers who venture out into that space in search of interesting and enjoyable experiences. A finished game is a beacon in design space, pointing to a good spot so the rest of us may also be enriched by it. But if we flock to the beacon and just stay there, we are discarding most of its value. Good spots in design space tend to be clustered - finding one means there are probably many nearby. The beacon frees us to explore by giving us a great starting point. It shouldn’t be a prison.

Consider a board game. If you open the box and find just a board and some differently-colored pawns with no rules explaining what to do with them, it’d be hard to play a fun game unless you yourself happen to be a skilled game designer. But with rules included, you can understand why the board looks the way it does and comes with those particular pieces. The designer’s intent has guided you to a good spot in design space and you can now have a good time playing the game as suggested.

But once you know why the pawns are different colors, you can decide to swap some of them for coins because you’re colorblind or just want things more visually distinct. Once you know how the pawns move, you can experiment with an accelerated version where they move twice as many spaces because you don’t have much time to play or you think it might be more fun. The rules have increased the value of the game pieces by pointing to a high-quality spot in design space. That value increases more if you can then branch out to find nearby spots that serve your needs even better. Forcing you to stay only in the spot described by the written rules cuts off that value.

Games aren’t perfect jewels plucked from the heavens and delivered in pure form. Games are ideas filtered through human capabilities, cultural lenses, economic realities, and technological limitations. A good designer with good resources can create valuable experiences, but there are so many more experiences than any one designer can imagine, let alone implement.

Dark Souls without difficulty, Dead Rising without time limits, Far Cry 2 without weapon jamming - these would all inarguably be different from the games their respective designers set out to make. But that doesn’t mean they are automatically worse for every player. That doesn’t mean they aren’t worth experiencing. To pretend otherwise makes our world smaller, darker, and sadder.

I’d rather go exploring.

Postscript: Why is this even a question?

As I’ve touched on before, it used to be standard practice for games to provide mechanisms for players to easily subvert the intended experience and play around the boundaries. No one would argue that id Software intended for the original DOOM to be played with unlimited health and the ability to walk through walls, but the fact that the player can easily do so by typing in some short codes makes the game better, not worse.

Why did this change? How did we lose the expectation that when you buy a game, you can look under the hood and play around with what you find? It’s hard to pin a specific motive on an industry-spanning trend, but my sense is that it’s about money and not about commitment to artistic vision. It’s about changes in technology increasing the amount of control that game developers can enforce on their games, and the application of that control to make more money.

Game modification devices like the Game Genie became infeasible as game consoles moved from cartridges to discs, and as both console and PC games became internet-connected (and mobile gaming basically started that way) it became possible to stamp out unsanctioned player tinkering and patch any exploits found after launch. This is sometimes good - internet-enabled centralized control means that game service providers can essentially referee online matches and prevent players from violating agreed-upon rules. But it doesn’t improve the play experience to have a referee artificially limiting the options of players who are just messing around by themselves, or of players who’d like to agree to play with a different set of rules. It doesn’t make the game better to take away the ability to explore its design space to see what makes it tick and how it could be tweaked or even improved.

What it does do is let the publisher sell more things. Some of these effects are obvious - “fun pain” doesn’t drive microtransactions if players can just type a code to fill their stamina meters or unlock characters. But some are more subtle and indirect. Warcraft III’s powerful map editor enabled fans to jump-start an immensely popular game genre that earned a fortune - but that money didn’t go to Blizzard. It wasn’t until 2015 that Blizzard launched their own take on MOBAs, Heroes of the Storm, which had to compete against the games created by Blizzard’s own fans. It never reached their level of success and entered a “long-term sustainability” phase in late 2018 as Blizzard moved developers to other projects and canceled upcoming tournaments.

So perhaps it’s not surprising that when Blizzard released Diablo III in 2012, it required a constant online connection even when playing alone and completely prevented modding. This frustrated many players (and triggered consumer-rights violation investigations since the game was often unplayable due to connection problems), so why would Blizzard set things up this way? Because it also meant that players had constant access to the real-money auction house and no mod-based alternative way to check out rare equipment.

This is why industry heavies refer to player tinkering as “cheating” and normalize the idea that it should be prevented - and then turn around and sell experience boosters to speed up progression in a premium AAA title. This is why major publishers have increasingly migrated to games-as-a-service models and why they’re pushing for a streaming-based future. Because it means they can exert more control over the player’s experience. And that means they can make more money.

I don’t think that’s a good enough reason to normalize the idea that games are only meant to be played and not meant to be played with. I don’t want to hand control of my play time over to those who would reduce its quality in order to extract more of my money. But I think that’s how we got here.